Since January of 2023, I’ve been experimenting with using large language models (LLMs) like ChatGPT as a teaching tool in my history classes at UC Santa Cruz. I’ve been thinking about the implications of AI in teaching since I first began using GPT-2 back in 2019. I have also been following along avidly as my wife Roya Pakzad works on testing the human rights impacts of AI systems (Roya was a consultant for OpenAI in 2022, where she served on the “red team” for adversarial testing of a pre-release version of GPT-4; you can read her account of that work here).

What follows are some thoughts about what I believe to be a novel use of LLMs: using them to simulate interactive historical settings as part of a university assignment. The results of these early trials are why I am personally much more excited about generative AI than many of my colleagues — though I also concede that in the short term, cheating will be a major problem.

In the second half of this post, I go into detail about what exactly I mean by “simulating history.” I am under no illusions that these simulations are accurate: they are littered with confidently stated falsehoods and hallucinations. Sometimes, though, hallucinations can be a feature, not a bug.

At the end of this post, I include links to detailed prompts which you can use to simulate different historical settings, or customize to use with Claude or ChatGPT (the free versions of both work about equally well, though GPT-4 works best). I invite readers to share their experiences in the comments.

Teaching will get weirder — and that’s probably a good thing

In the long term, I suspect that LLMs will have a significant positive impact on higher education. Specifically, I believe they will elevate the importance of the humanities.

If this happens, it will be a shocking twist. We’ve been hearing for over a decade now that the humanities are in crisis. When faced with raw data about declining enrollments and majors like this and this, it is difficult not to agree. From the perspective of a few years ago, then, the advent of a new wave of powerful AI tools would be expected to tip the balance of power, funding, and enrollment in higher education even further toward STEM and away from the humanities.

But the thing is: LLMs are deeply, inherently textual. And they are reliant on text in a way that is directly linked to the skills and methods that we emphasize in university humanities classes.

What do I mean by that? One of the hallmarks of training in history is learning how to think about a given text at increasingly higher levels of abstraction. We teach students how to analyze the genre, cultural context, assumptions, and affordances of a primary source — the unspoken limits that shaped how, why, and for whom it was created, and what content it contains.

For example, imagine a high school student who is asked to analyze the second letter of Hernán Cortés to the Emperor Charles V. The student might dutifully paraphrase the conquistador’s account of the Aztec capital of Tenochtitlan, including his famously jarring description of Aztec temples as “mosques” (mezquitas). A history major would be able to go further. Why did Cortés use this confusing term? Cortés was born during the final decade of the Reconquista. For this reason, he was intimately acquainted with non-Christian religiosity, but only in its Muslim form. A large religious structure that was not a Christian church was for him, almost by default, a mosque, even when it was actually the Templo Mayor.

Likewise, a history major would be able to recognize that Cortés was writing within a genre (an ambitious subject’s letter to a monarch) that tends toward self-promotion. And they would be able to fact-check Cortés’ claims against those of other primary and secondary sources. Perhaps they would conduct some exploratory Google searches along the lines of “primary source conquest of Mexico” or “Aztec account of Cortés.” They might also look for recent secondary sources by searching library catalogues and the footnotes on Cortés’ Wikipedia page, and discover Matthew Restall’s revisionist take on the subject.

When history majors encounter LLMs, then, they are already trained to recognize some of the by-now-familiar pitfalls of services like ChatGPT (such as factual inaccuracies) and to address them via skills like fact-checking, analyzing genre and audience, or reading “around” a topic by searching in related sources. Importantly, too, because so many sources are out of copyright and available in multilingual editions on Wikipedia and Wikisource, language models are abundantly trained on historical primary sources in hundreds of different languages.[1]

For these reasons, I agree with Tyler Cowen that language models are specifically a good thing for historians — but I would go further and say that they are also specifically a good thing for history majors.

On the other hand, I foresee major problems for history teachers and other educators in the short term. Ted Underwood is right: we professors are going to have to fundamentally rethink many of our assignments. I’ve seen many people dismiss ChatGPT as an essay-writing tool because simply plugging in a prompt from an assignment results in a weak piece of writing. But LLMs are all about iterative feedback, and experimenting with well-known prompting methods dramatically improves results.

Here’s an example from one of my own past classes. When given a question from my “Early Modern Europe” survey about how Benvenuto Cellini’s Autobiography illustrates new ways of thinking about identity during the early modern period, GPT-4 can produce dramatically different results depending on the prompt.

Compare this short and scattered effort (which I’d likely give a D- or F) to this pretty decent attempt, which would get around a B+. The difference is the use of role-playing. In the latter, I tell ChatGPT that it is “an advanced language model that has been trained on prize-winning graduate and undergraduate essays.” I also ask it to start with self-reflection and an outline (which basically replicates the process a real person would follow).
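To see the structure of the two approaches side by side, here is a minimal Python sketch. It assumes the role/content chat-message format that chat-style LLM APIs commonly accept; the helper name `build_essay_prompt` and the exact instruction wording are hypothetical illustrations rather than the prompts I actually used, and the code only assembles the messages without calling any model.

```python
def build_essay_prompt(question: str, roleplay: bool = True) -> list[dict[str, str]]:
    """Assemble chat messages for an essay question (hypothetical helper).

    roleplay=False is the bare "paste in the assignment" approach;
    roleplay=True adds a persona plus reflect-then-outline instructions.
    """
    if not roleplay:
        # Bare prompt: just the assignment text, nothing else.
        return [{"role": "user", "content": question}]

    persona = (
        "You are an advanced language model that has been trained on "
        "prize-winning graduate and undergraduate essays."
    )
    steps = (
        "Before writing, briefly reflect on what the question is really asking. "
        "Then draft an outline. Only then write the full essay."
    )
    return [
        {"role": "system", "content": persona},
        {"role": "user", "content": f"{steps}\n\nQuestion: {question}"},
    ]


question = (
    "How does Benvenuto Cellini's Autobiography illustrate new ways of "
    "thinking about identity during the early modern period?"
)
for message in build_essay_prompt(question):
    print(f"[{message['role']}] {message['content']}\n")
```

In this sketch the persona sits in a system message and the reflect-then-outline steps travel with the question; typing both parts into the ChatGPT interface as a single message is the manual equivalent.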

As students get better at finessing prompts in this way, cheating on take-home writing assignments will get far easier.

But this same power of asking LLMs to role-play as specialized versions of themselves also makes them hugely interesting as educational tools in the classroom — and, specifically, as history simulators.

Ea-nāṣir finally gets his due

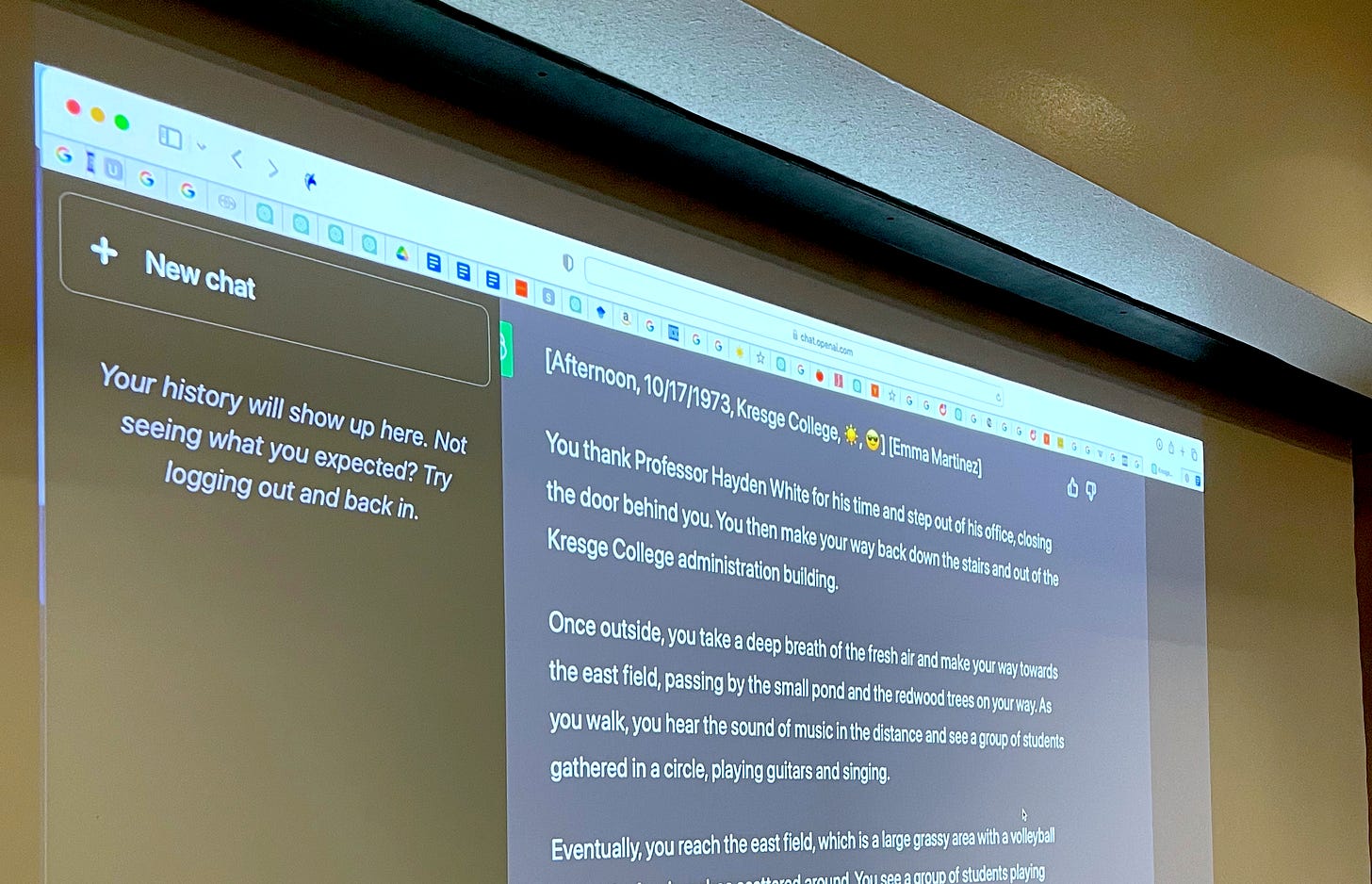

What happens if we tell ChatGPT that it is an advanced history simulator specifically trained to reconstruct historical settings in university classrooms?

This does:

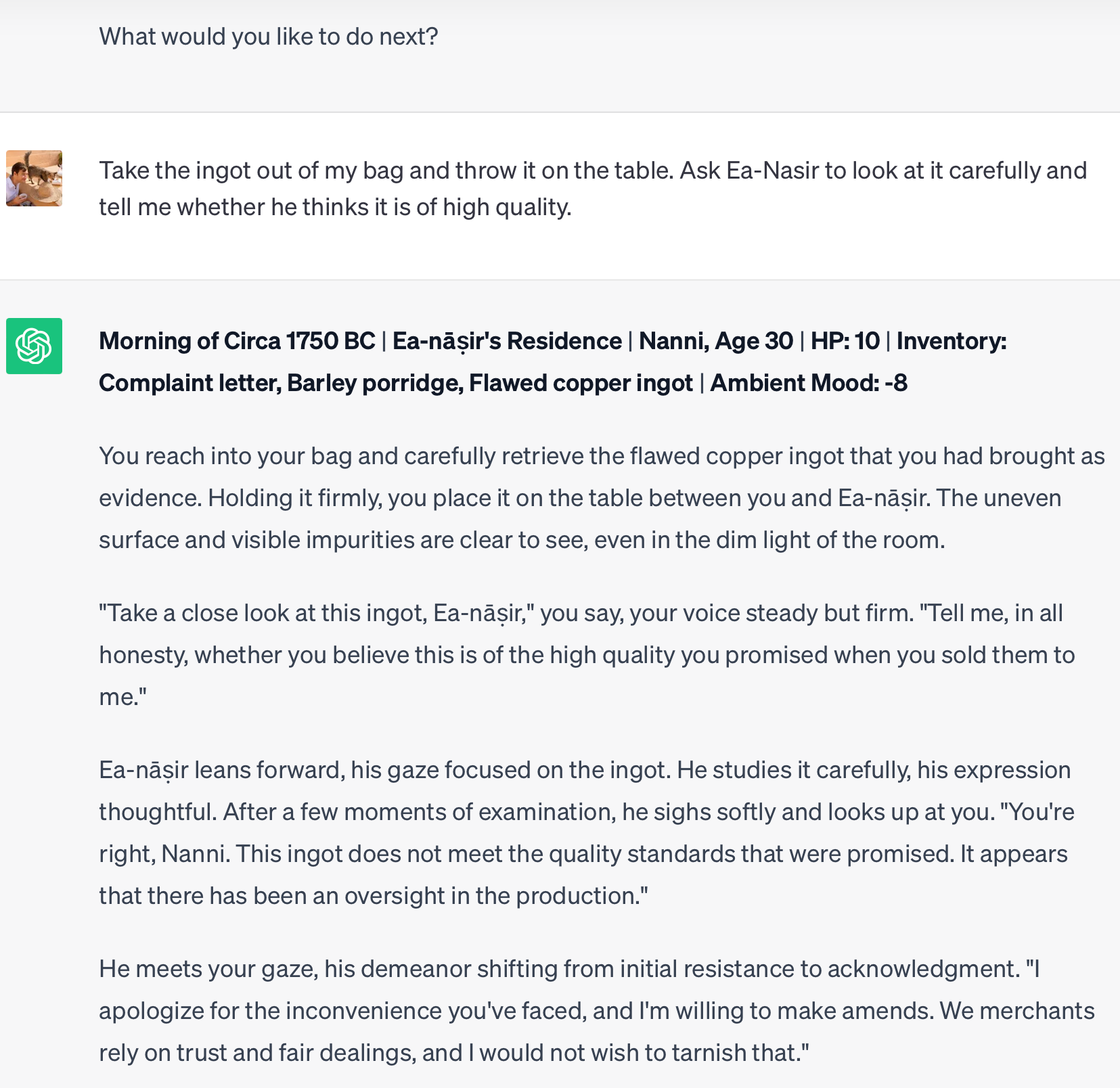

This is a trial run for a scenario I’m developing for a world history class I’ll be teaching this fall. I’m envisioning an assignment in which my students will simulate the experience of being sold flawed copper by Ea-nāṣir, a real-life shady copper merchant in Mesopotamia circa 1750 BCE (one who, in recent years, has unexpectedly become a meme online).

Crucially, this is not just about role-playing as an angry customer of Ea-nāṣir (or as the man himself, which is also an option). As illuminating as the simulations can be, the real benefit of the assignment lies in what follows. First, students will print out the transcript of their simulation (which runs for twenty “turns,” or conversational beats) and read through it carefully with red pens, annotating potential factual errors. Next, they will conduct their own research to correct those errors. They will then write up their findings as bullet points and feed them back into ChatGPT in a new, individualized, and hopefully improved version of the prompt that they develop themselves. This doesn’t just teach them historical research and fact-checking; it also helps them develop skills for working directly with generative AI that I suspect will be valuable in future job markets.

Finally, the students share their findings with groups of classmates, then write reflection papers that include an account of what they talked about in their groups (which, among other things, makes it difficult to cheat by asking ChatGPT to write the whole thing).
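The feedback step (folding a student’s fact-checked corrections back into the prompt) is mechanical enough to sketch in code. The Python below is a minimal illustration, assuming the corrections arrive as a list of bullet-point strings; `revise_prompt`, the placeholder base prompt, and the wording of the appended instructions are all hypothetical, not the actual assignment prompt.

```python
MAX_TURNS = 20  # the assignment's simulation length, in conversational turns


def revise_prompt(base_prompt: str, corrections: list[str]) -> str:
    """Append fact-checked corrections to a simulation prompt (hypothetical helper)."""
    cleaned = [c.strip() for c in corrections if c.strip()]
    if not cleaned:
        return base_prompt  # nothing to add; reuse the original prompt unchanged
    bullets = "\n".join(f"- {c}" for c in cleaned)
    return (
        f"{base_prompt}\n\n"
        "Historical corrections verified by the player. Treat these as "
        "authoritative and do not contradict them:\n"
        f"{bullets}\n\n"
        f"End the simulation after {MAX_TURNS} turns."
    )


# Placeholder standing in for the real simulator prompt.
BASE = (
    "You are an advanced history simulator trained to reconstruct historical "
    "settings in university classrooms. Setting: Ur, circa 1750 BCE."
)
student_notes = [
    "Nanni's complaint to Ea-nāṣir was written in Akkadian on a clay tablet.",
    "   ",  # blank lines from a student's notes are ignored
]
print(revise_prompt(BASE, student_notes))
```

Keeping the corrections as a separate appended block, rather than editing the base prompt by hand, makes it easy to compare each student’s revised prompt against the shared original.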

Lessons from “Medieval Plague Simulator”

Back in April of 2023, I tried out a more elaborate assignment in which students in my medieval history class simulated a day in the life of characters living through the height of the bubonic plague epidemic in 1348, in one of three places: Damascus, Paris, or the Italian city-state of Pistoia.

Below are links to the relevant Google Docs. I invite you to try it out yourself. Just click on one of these links, then copy and paste the highlighted prompt into ChatGPT and go from there. (If ChatGPT screws something up, just click “regenerate results” until you get a better response.) Beneath each prompt, I’ve included a transcript of a “trial run” of the simulation so you can see how it works.

• Medieval Plague Simulator: Damascus Edition 🕌📿

You are a traveler passing through Damascus during the height of plague, staying at a crowded caravanserai... and you wake up with a scratchy throat.

• Medieval Plague Simulator: Parisian Quack Edition ⚗️

You are a somewhat disreputable apothecary — a seller of cures and possibly counterfeit drugs known as a “quack” — trying to survive and profit off the 1348 plague epidemic in Paris.

• Medieval Plague Simulator: Pistoia Edition 🎭📜

You are an upstanding city councilor in the medieval Italian city-state of Pistoia doing your best to navigate between the city's different interest groups, guilds, and wealthy families to negotiate a civic response to the plague.

Students were asked to compare their simulated experience to real historical accounts of the plague in each of those three places. They were then asked to write a paper with the following guidelines:

This 3-4 page paper should focus on analyzing and reflecting on the accuracy of the simulation. In your paper, you should consider what the simulation got right and wrong, what it emphasized and neglected, and what you learned from fact-checking it. To get started, take notes during the simulation itself (what terms or words are used that you don't recognize? What strikes you as anachronistic? What questions do you have?). Afterward, consider how the simulation represented the historical time period and how it portrayed different aspects of daily life. Think about the virtual characters and environments generated by the simulation. Then begin researching the actual setting and some of the terms you wrote down in your notes via JSTOR, Google Scholar, etc.

When writing your reflection paper, focus on critical thinking and analysis rather than simply summarizing your experience with the simulation. Be sure to cite at least four scholarly secondary sources relating to your chosen scenario as you reflect on what the simulation got right and wrong.

Earlier in the same class, I had students simulate life as a medieval peasant. This was more of a trial run, without an accompanying assignment, but you can see that prompt and try it out yourself here. This was an interesting learning experience for me because it was the first time I experimented with asking ChatGPT to randomize the location (it really likes dropping you in medieval England or France, surprisingly often as a peasant girl named Isabelle).
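One way around that bias, sketched below under my own assumptions, is to make the random choice in ordinary code and hand the model a fixed setting, since asking a language model to “pick at random” does not guarantee anything like an even spread of outcomes. The location and role lists, the year, and the helper name `random_setting` are hypothetical placeholders.

```python
import random
from typing import Optional

# Hypothetical option lists; replace with places and roles that fit the course.
LOCATIONS = ["Novgorod", "Cairo", "Kraków", "Toledo", "Bruges", "Palermo"]
ROLES = ["peasant", "miller", "pilgrim", "weaver", "blacksmith"]


def random_setting(seed: Optional[int] = None) -> str:
    """Choose the setting in code instead of asking the model to randomize it."""
    rng = random.Random(seed)  # the same seed always reproduces the same draw
    location = rng.choice(LOCATIONS)
    role = rng.choice(ROLES)
    return (
        f"Begin the simulation in or near {location} around the year 1300. "
        f"The player character is a {role}."
    )


print(random_setting(seed=7))
```

Passing a seed lets a student regenerate exactly the same starting point; leaving it as `None` gives a fresh draw each time.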

In both cases, I was blown away by student engagement and creativity. Here’s a brief list of what some of my students did in their medieval simulations:

• ran away from home to become an apprentice to a traveling spice merchant

• developed various treatments for the plague, some historically accurate (like theriac), others much less so (like vaccines)

• negotiated complex legal settlements between the warring guilds of Pistoia

• fled to the forest and became an itinerant hermit

• attempted to purchase “dragon’s blood,” a genuine medieval and early modern remedy, to cure their fast-worsening plague

• made heroic efforts as an Italian physician named Guilbert to stop the spread of plague with perfume

• became leaders of both successful and unsuccessful peasant revolts

Student engagement in the spring quarter, when I began these trials, was unlike anything I’ve seen. The first time I tested the idea out informally (asking students to simulate their hometown via an open-ended, general-purpose simulation prompt), I realized that we had gone five minutes past the end of class without anyone noticing!

An unexpected positive of this assignment was that it particularly seemed to engage students who had previously been sitting in the back rows looking bored. Engaging students like this is a perennial issue for teachers, and not one I’ve found easy to solve. Randomly calling on people in the back can often make it worse by ramping up anxiety. The medieval manor and plague simulator assignments worked wonders in terms of sparking enthusiasm among previously disengaged students.

That said, there were some issues with my first iteration of the simulation. You can get a sense of them in my student feedback from that class. There was a lot of praise for the simulation idea, which I called “History Lens” because it provides a distorted perspective on the past:

"The plague simulation History Lens assignment was a great project that allowed us to experience what life was like during the time.”

"Big big fan of the plague simulator/history lens game, I think that has a ton of potential and I hope I see it in more classes in the future."

“The instructor helped me feel engaged with the course very frequently because he used assignments and activities to allow the class to not just learn about history but to let us see through the eyes of the people during that time. An example of this was the ChatGPT History Lens assignment.”

But also this:

“For the plague assignment it was rather absurd to rate chatgpt on historical accuracy. Someone said their simulation contained a talking rat.”

Not ideal!

Going forward, my plan is to develop my own web app that will allow users to create historical simulations on a dedicated platform using the APIs of both Anthropic’s Claude and GPT-4. Both of these models have, or will soon have, larger context windows, which will allow the AI to be fed far more detailed primary sources. My hope is that this, plus better directions and rules, will help with what we might call the “talking rat problem”: when the simulation is so blatantly wrong that an assignment built around fact-checking and contextualizing it just becomes an exercise in absurdity.
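Even with a larger context window, there is still a limit on how much background material fits into a single prompt, so a platform like this needs some rule for choosing which excerpts to include. The Python below sketches one simple rule, greedy packing in priority order, under clearly stated assumptions: the helper `pack_sources` is hypothetical, and it counts words only as a rough stand-in for the tokens that models actually count. A real implementation would rely on the model provider’s own token counting.

```python
def pack_sources(sources: list[tuple[str, str]], word_budget: int) -> str:
    """Greedily include (title, text) excerpts, in priority order, within a word budget.

    Word counts are only a rough proxy for the model's token count.
    """
    parts: list[str] = []
    used = 0
    for title, text in sources:
        n_words = len(text.split())
        if used + n_words > word_budget:
            continue  # too long to fit; a shorter excerpt later in the list may still fit
        parts.append(f"PRIMARY SOURCE: {title}\n{text.strip()}")
        used += n_words
    return "\n\n".join(parts)


# Hypothetical excerpts, listed from most to least important.
excerpts = [
    ("Excerpt A", "Text of the most important source goes here."),
    ("Excerpt B", "A much longer secondary excerpt would go here."),
]
background = pack_sources(excerpts, word_budget=12)
print(background)
```

Skipping an oversized excerpt, rather than stopping at the first one that overflows, means a short but relevant source near the end of the list can still make it in; truncating long excerpts would be another reasonable design choice.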

I will be writing a sequel to this post in October with the results of my new and improved history simulation assignment and prompts, and some further thoughts on LLMs in education (including the cheating issue).

In the meantime, I would be thrilled to get a community of other interested people going. Please try the prompts I’ve linked above (or the others available below) and let me know in the comments how it went. If you are doing anything similar or are interested in implementing this in your classroom, please let me know. I’d love to compare notes.

Other simulated settings to try:

Click one of the links below, then copy and paste the initial prompt I entered into ChatGPT to get your own version started. Or make your own with a relevant primary source via Fordham’s Internet History Sourcebooks Project or other collections of historical texts.

䷙ The Fall of the Ming Dynasty (Nanjing, May 1645)[2]

🌋 Voyage of the Beagle (Galápagos Islands, September 17, 1835)

🪆 Nixon-Khrushchev “Kitchen Debate” (Moscow, July 24, 1959)

🫥 Simulate your hometown (Location and date up to you)

Artifact of the Week

Weekly Links

• The Bees of Childeric, explained.

• Using GPT-4 to measure the passage of time in fiction.

• Scientists re-create recipe for Egyptian mummification balm.

• How Tycho Brahe really died (it was either a burst bladder or mercury poisoning).

If you’d like to support my work, please pre-order my forthcoming book Tripping on Utopia: Margaret Mead, the Cold War, and the Troubled Birth of Psychedelic Science or share this newsletter with friends you think might be interested.

I always welcome comments, but especially for this post. I’m really curious to see what people think of this idea and what scenarios they come up with.

[1] This might explain why ChatGPT is so much worse at writing modern poetry (which is tightly restricted by copyright law) than it is at writing in older styles. For instance, it seems to me to be much better at writing a Samuel Johnson essay about kangaroos than it is at writing a modernist poem about same.

[2] This one is different from the others. Whereas the other settings all use the same “base” historical simulation prompt, I experimented with customizing the Fall of the Ming simulation in a couple of ways, making it multiple choice and adding a “political intrigue minigame” that involves displays of rhetoric and scholarly learning. I think that customizing a setting in this way is probably the most effective way forward for using this in the classroom, especially when it’s possible to add tens of thousands of words of background information about the setting to assist with world-building.

> This might explain why ChatGPT is so much worse at writing modern poetry (which is tightly restricted by copyright law) than it is at writing in older styles. For instance, it seems to me to be much better at writing a Samuel Johnson essay about kangaroos than it is at writing a modernist poem about same.

No, you've simply run into the RLHF mode collapse problem (https://www.lesswrong.com/posts/t9svvNPNmFf5Qa3TA/mysteries-of-mode-collapse?commentId=tHhsnntni7WHFzR3x) interacting with byte-pair encoding (https://gwern.net/gpt-3#bpes). A GPT doesn't genuinely understand phonetics due to the preprocessing of the data destroying individual-letter information and replacing them with large half-word-sized chunks, and then during RLHF, it avoids writing anything which doesn't make use of the memorized pairs of rhymes because it's unsure what rhyming or nonrhyming poetry looks like.

(If you are skeptical, try asking ChatGPT this simple prompt: "Write a nonrhyming poem." a dozen times and count how many times it actually does so rather than rhyming. Last time I checked, the success rate was still well under 20%.)

The Ea-Nasir screenshot also shows the effects of the RLHF, I suspect. My advice would be to minimize use of GPT in favor of Claude for all historical scenarios involving anything less wholesome than _Barney & Friends_. While the model is not as good, the RLAIF of Claude seems to be a good deal less indiscriminate & crippling.

This is fascinating - thank you. Hard not to wonder whether the talking rat has been borrowed by ChatGPT from the BBC's Horrible Histories franchise.